In a major escalation of transatlantic tensions over online speech, the U.S. House Judiciary Committee — chaired by Republican Rep. Jim Jordan — released a high-profile interim staff report in early February 2026. The document, titled “The Foreign Censorship Threat, Part II: Europe’s Decade-Long Campaign to Censor the Global Internet and How it Harms American Speech in the United States”, accuses the European Commission of systematically pressuring major social media platforms to suppress political content ahead of national elections across Europe.

The report, which runs to more than 160 pages, claims this activity, intensified since the Digital Services Act (DSA) took effect in 2023, has directly disadvantaged conservative and populist political parties while undermining democratic fairness. Drawing on non-public documents subpoenaed from companies including Meta and TikTok, the Committee alleges the EU has engaged in a broader, decade-long effort to control global online discourse, with ripple effects that harm free speech even in the United States.

Key Allegations: Interference in at Least Eight Elections

The report specifically highlights pressure campaigns before multiple high-stakes votes, arguing that closed-door meetings between Commission officials, national regulators, left-leaning NGOs, and platform representatives were used to push for aggressive content moderation. According to the document, these efforts targeted narratives labeled as “disinformation” or “hate speech” — categories critics say often encompass legitimate political opinions, satire, memes, and debate on immigration, gender policies, and EU integration.

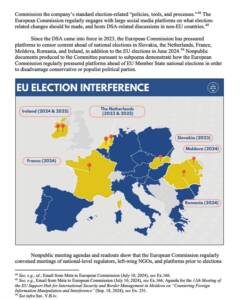

The Committee lists the following instances of alleged interference:

Slovakia (2023 parliamentary elections): Platforms reportedly censored statements such as “There are only two genders” or criticism of certain gender-related policies as “hate speech” under EU pressure.

The Netherlands (2023 and 2025 elections): Coordination allegedly included granting “trusted flagger” status to government bodies, enabling faster flagging and removal of content.

France (2024 elections): Pre-vote demands reportedly focused on moderating political discourse critical of establishment positions.

Romania (2024 presidential election): The report questions the annulment of the initial results over claims of foreign, primarily Russian, interference, noting that TikTok reportedly found “no evidence” of coordinated foreign campaigns while suggesting EU-driven moderation shaped online narratives.

Moldova (2024 elections): Discussions centered on countering “foreign information manipulation,” with platforms pressured to act ahead of the vote.

Ireland (2024 general election and 2025 presidential election): The Irish regulator Coimisiún na Meán allegedly hosted “DSA election roundtables” with Commission officials and “biased fact-checkers,” creating what the report calls “censorship pressure.”

Additional references include the June 2024 European Parliament elections and other national contests in countries such as Poland, Spain, Belgium, and Germany.

The Committee argues these actions form part of a pattern in which the European Commission has convened more than 90 meetings since 2020 under frameworks such as the Code of Practice on Disinformation, commitments the report says later evolved into mandatory DSA obligations. It claims officials issued “election guidelines” that, while presented as voluntary, functioned as a de facto compliance floor, backed by warnings that non-compliance could trigger massive fines (up to 6% of global annual revenue).

Broader Context: The DSA and Global Speech Implications

The Digital Services Act, adopted in 2022, has applied to very large online platforms since August 2023 and to all covered services since February 2024. It requires very large online platforms to assess and mitigate “systemic risks” including disinformation, hate speech, and threats to electoral integrity. Critics, including the House Judiciary report, contend this empowers regulators to demand changes to global moderation policies, as companies often apply uniform rules worldwide to avoid separate regional systems and potential penalties.

The report frames the DSA as the culmination of efforts dating back to around 2015, when voluntary codes began pressuring platforms. It cites examples of moderation targeting COVID-19 debates, migration discussions, and transgender issues — content the Committee says is often factual or protected political speech.

A particularly contentious point is the extraterritorial impact: because American companies dominate major platforms, EU demands allegedly force global changes that “chill” U.S. users’ speech. The report points to recent enforcement actions, including the first-ever DSA fine against X (formerly Twitter) in late 2025, as evidence of this dynamic.

EU Response: Firm Rejection of Claims

The European Commission has categorically dismissed the allegations as “pure nonsense,” “baseless,” “completely unfounded,” and “absurd.” Spokespeople emphasize that the DSA empowers platforms to address real risks to elections, public debate, children, and democracy — without the Commission dictating content or interfering in national votes.

Brussels insists roundtables occur only at the request of member states, that platforms remain responsible for their own compliance, and that the framework targets illegal content and foreign manipulation (e.g., from Russia) rather than domestic political views. EU officials argue robust rules are essential in an era of misinformation and hybrid threats, protecting rather than undermining democracy.

Why This Matters: Free Speech vs. Electoral Integrity

The controversy highlights a deepening divide between U.S. and European approaches to online governance. Republicans on the House Judiciary Committee portray the DSA as a tool for narrative control and political favoritism, while supporters in Europe view it as a necessary defense against disinformation campaigns that threaten democratic processes.

The report has already fueled hearings, diplomatic friction, and calls for U.S. legislative countermeasures to shield American speech from foreign influence. As platforms navigate conflicting pressures, the debate raises fundamental questions: How should democracies balance combating harmful online content with preserving open political discourse? And can one region’s rules legitimately shape global speech norms?

With investigations ongoing and policy responses possible on both sides of the Atlantic, the clash over digital freedom shows no sign of resolution.